A Simple Guide to Measuring the Product-Market Fit of Your Product or Feature

There is a huge amount of discussion about the importance of hitting product/market fit for what you’re building. Interestingly, there is much less about how to measure whether you actually have product/market fit for what you’ve built.

Part of the reason is that it’s a question you shouldn’t have to ask:

“If you have to ask whether you have Product/Market Fit, the answer is simple: you don’t.” – Eric Ries

I can see where Eric is coming from. Sometimes we lose track of our customers and focus instead on terms such as “product/market fit”; if we were in closer touch with our customers, we would know whether we have it or not. Granted, that’s just my own interpretation of his quote.

The product/market fit survey and how to use it properly

What questions should I ask my users/customers?

The best way I’ve found to measure product/market fit is a survey developed by Sean Ellis. It asks a number of questions, the most important of which is: “How disappointed would you be if you could no longer use this product?”

He even built a tool for this, survey.io, which you can use to run the survey on your customer base. It includes a few other questions, but none is as helpful as the “How disappointed would you be?” question.
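To make the scoring concrete, here is a minimal Python sketch that tallies answers to the “How disappointed would you be?” question and reports the share of respondents who chose the strongest answer. The answer labels (“Very disappointed”, “Somewhat disappointed”, “Not disappointed”) are assumptions modeled on the usual wording of the survey, and the function name is hypothetical; adjust both to whatever your survey tool exports.

```python
from collections import Counter

# Assumed answer labels; match these to the options your survey tool actually uses.
VERY = "Very disappointed"
OPTIONS = (VERY, "Somewhat disappointed", "Not disappointed")


def very_disappointed_share(answers: list[str]) -> float:
    """Return the fraction of valid answers that are 'Very disappointed'.

    Answers that don't match one of the assumed OPTIONS are ignored, so blank
    or malformed responses don't distort the denominator.
    """
    counts = Counter(a for a in answers if a in OPTIONS)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no valid answers to score")
    return counts[VERY] / total


if __name__ == "__main__":
    # Made-up responses for illustration only.
    sample = [VERY] * 24 + ["Somewhat disappointed"] * 12 + ["Not disappointed"] * 4
    share = very_disappointed_share(sample)
    print(f"{share:.0%} would be very disappointed")
```

Tracking this single share over time (or across features) is what makes the survey comparable from one run to the next.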

How many people do I need for conclusive results?

Hiten Shah helped us a great deal in figuring this out, and his best piece of advice was that you don’t need more than 40-50 responses for your results to be meaningful. Of course, the more responses you get, the better, but a sample of 40-50 is usually enough.
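One way to see why 40-50 responses can be enough is to look at the margin of error on the share of people picking a given answer. The sketch below uses the standard normal-approximation formula for a proportion at 95% confidence; it is an illustration we’ve added, not necessarily the reasoning behind Hiten’s advice. In the worst case (an observed share of 50%), 40 responses give a margin of roughly ±15.5 percentage points and 50 give roughly ±13.9: wide, but enough to separate a clearly strong result from a clearly weak one.

```python
import math

# z-score for a two-sided 95% confidence interval (normal approximation).
Z_95 = 1.96


def margin_of_error(p: float, n: int, z: float = Z_95) -> float:
    """Approximate margin of error for an observed proportion p from n responses.

    Uses the normal (Wald) approximation z * sqrt(p * (1 - p) / n), which is
    rough for very small samples or proportions close to 0 or 1.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    # p = 0.5 gives the widest (worst-case) margin for a given sample size.
    for n in (40, 50, 100, 200):
        print(f"n={n:>3}: ±{margin_of_error(0.5, n):.1%}")
```

Because the margin shrinks with the square root of n, doubling from 50 to 100 responses only narrows it from about ±14 to about ±10 points, which is consistent with “more is better, but 40-50 is usually enough.”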

Who should I send the survey to so as to not skew results?

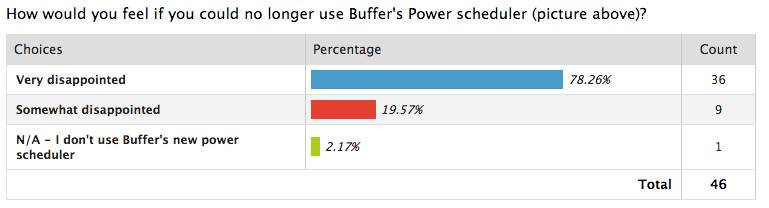

We’re currently building something new at Buffer that we call the “Power Scheduler.” It has been in beta for a few months, and our usage metrics for it were generally good, but we couldn’t tell how useful it really was. So I picked out the top 200 people currently using the Power Scheduler, surveyed them, and this was the result:

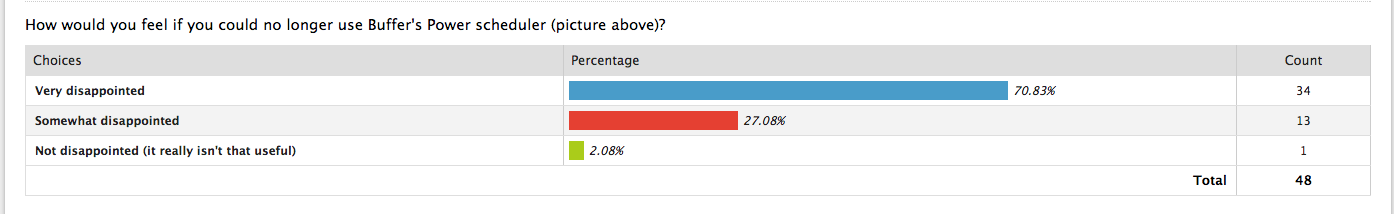

Of course, sending the survey only to your most engaged users gives you a very skewed result, and a few people on our team questioned whether that was the right approach. So we refined who we would survey with the help of Sean Ellis himself, who also built Qualaroo, another very helpful survey tool that we use a lot here at Buffer. He suggested this:

I generally recommend to survey the following:

– People that have experienced the core of your product offering

– People that have used your product at least twice

– People that have used your product in the last two weeks
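Sean’s three criteria translate directly into a filter over your user records. Below is a minimal sketch assuming a hypothetical `User` record with an `experienced_core` flag and a list of `usage_dates` (one entry per use). How you decide that someone has “experienced the core of your product offering” is up to you; the criteria don’t define it.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class User:
    """Hypothetical user record; map these fields to your own analytics data."""
    email: str
    experienced_core: bool  # has used the core of the product offering
    usage_dates: list[date] = field(default_factory=list)  # one entry per use


def eligible_for_survey(user: User, today: date, window_days: int = 14) -> bool:
    """Apply the three criteria: core experience, 2+ uses, used in the last two weeks."""
    if not user.experienced_core:
        return False
    if len(user.usage_dates) < 2:
        return False
    last_used = max(user.usage_dates)
    return last_used >= today - timedelta(days=window_days)


def survey_audience(users: list[User], today: date) -> list[User]:
    """Return only the users who meet all three criteria."""
    return [u for u in users if eligible_for_survey(u, today)]


if __name__ == "__main__":
    today = date(2024, 5, 31)
    users = [
        User("a@example.com", True, [date(2024, 5, 1), date(2024, 5, 28)]),
        User("b@example.com", True, [date(2024, 5, 30)]),  # only used once
        User("c@example.com", False, [date(2024, 5, 20), date(2024, 5, 29)]),  # never reached the core
        User("d@example.com", True, [date(2024, 3, 1), date(2024, 3, 9)]),  # not used recently
    ]
    print([u.email for u in survey_audience(users, today)])
```

Unlike picking the top 200 users by activity, this keeps occasional but genuine users in the sample, which is what reduces the skew.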

When we ran the survey again with the help of Sean and Hiten (Hiten built CrazyEgg and KISSmetrics and learned a lot about this from scaling both companies), we still got a very promising result, although less stark than the one above:

How can I use this with features instead of products?

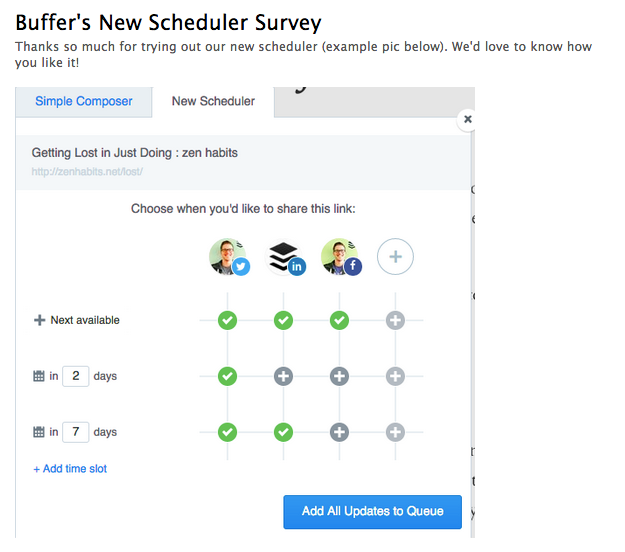

I’m a big fan of using this survey for new features you are building once your main product already has product/market fit. The best way I’ve found to make it clear which feature you’re asking about is to include an image of that feature in the survey, like in this example:

Why this survey might become even more important as your product grows

One interesting problem we ran into at Buffer (definitely a great problem to have!) was this: it almost didn’t matter what new feature or product we put live, we could get press and buzz around it. That can make it feel like everything you’re building is a success when it really isn’t, simply because you’re not monitoring your key metrics closely.

This happened with our launch of the Daily app, for example. We saw great PR, tons of votes on Product Hunt, and lots of encouragement from our community. What we didn’t look at was whether people were coming back to use the product (they weren’t!), so we fooled ourselves for quite some time before we realized what was happening.
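The number we overlooked, whether people come back, is easy to compute from activity logs. Here is a minimal sketch of one common definition, assumed for illustration: the share of users who were active again at least N days after their first use. The `activity` mapping and the seven-day gap are hypothetical choices, not a metric defined in this post.

```python
from datetime import date, timedelta


def returning_share(activity: dict[str, list[date]], min_gap_days: int = 7) -> float:
    """Fraction of users active again at least `min_gap_days` after their first use.

    `activity` maps a user id to the dates on which that user used the product.
    Users with no recorded activity are ignored.
    """
    active = {uid: days for uid, days in activity.items() if days}
    if not active:
        raise ValueError("no activity to measure")
    returned = 0
    for days in active.values():
        cutoff = min(days) + timedelta(days=min_gap_days)
        if any(d >= cutoff for d in days):
            returned += 1
    return returned / len(active)


if __name__ == "__main__":
    # Made-up launch-week data for illustration only.
    launch = date(2024, 5, 1)
    activity = {
        "u1": [launch],
        "u2": [launch, launch + timedelta(days=1)],
        "u3": [launch, launch + timedelta(days=10)],
        "u4": [launch + timedelta(days=2)],
    }
    print(f"{returning_share(activity):.0%} came back after a week")
```

Looking at a number like this next to launch-day signals (votes, press mentions) makes it much harder to mistake attention for usage.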

So even after you have product/market fit, staying laser-focused on whether you’re actually building something useful is incredibly important. We now try to separate marketing from the product launch as much as we can, keeping a new feature or product in beta until we know it is truly providing value to our customers.

That’s where the product/market fit survey comes in. We’ve started using it on multiple occasions, as in the Power Scheduler example above, to find out whether something is working before the general public even knows about it.

As you grow, I believe staying disciplined and not getting carried away by the buzz (something I’ve fallen prey to many times!) is the key to continuing to build great things for your customers.

Image source: Unsplash
