For the past year, my team and I have been building a brand new social analytics solution within Buffer called Buffer Analyze. We’ve done our best to distill research, data, and intuition into a lean, lovable solution, and we’ve been fortunate to find early signs of product/market fit.

(Currently, the Analyze beta is open to Buffer for Business customers. You can learn more about Analyze’s social analytics features here.)

There is so much we want to do with Analyze because there are so many things we’re convinced will deliver value. At the same time, we’re tightly constrained by our resources as a very small team within Buffer.

To be honest, I wouldn’t have it any other way. Tight constraints force a creative, disciplined and critical approach to product design and development.

The product is the result of managing a delicate balance of tradeoffs.

I’m excited to share more about these tradeoffs: how we work within constraints, how we scope new product features, and how we build and release value for customers. Keep reading to learn more, and feel free to ask any questions in the comments or drop me a note on Twitter.

Before we scope: Dream big, then go small

When it comes to implementing product features, I usually dream big, then go small.

Once I think I’ve identified our biggest opportunities (a process worthy of its own post!), I’ll get together with our Analyze designer, James, to review several potential solutions. Eventually we’ll land on a design we think hits the mark, we’ll flesh out many of the details, and we’ll prototype relevant workflows. We also always include the engineers in this process for early feedback on the design and ideas.

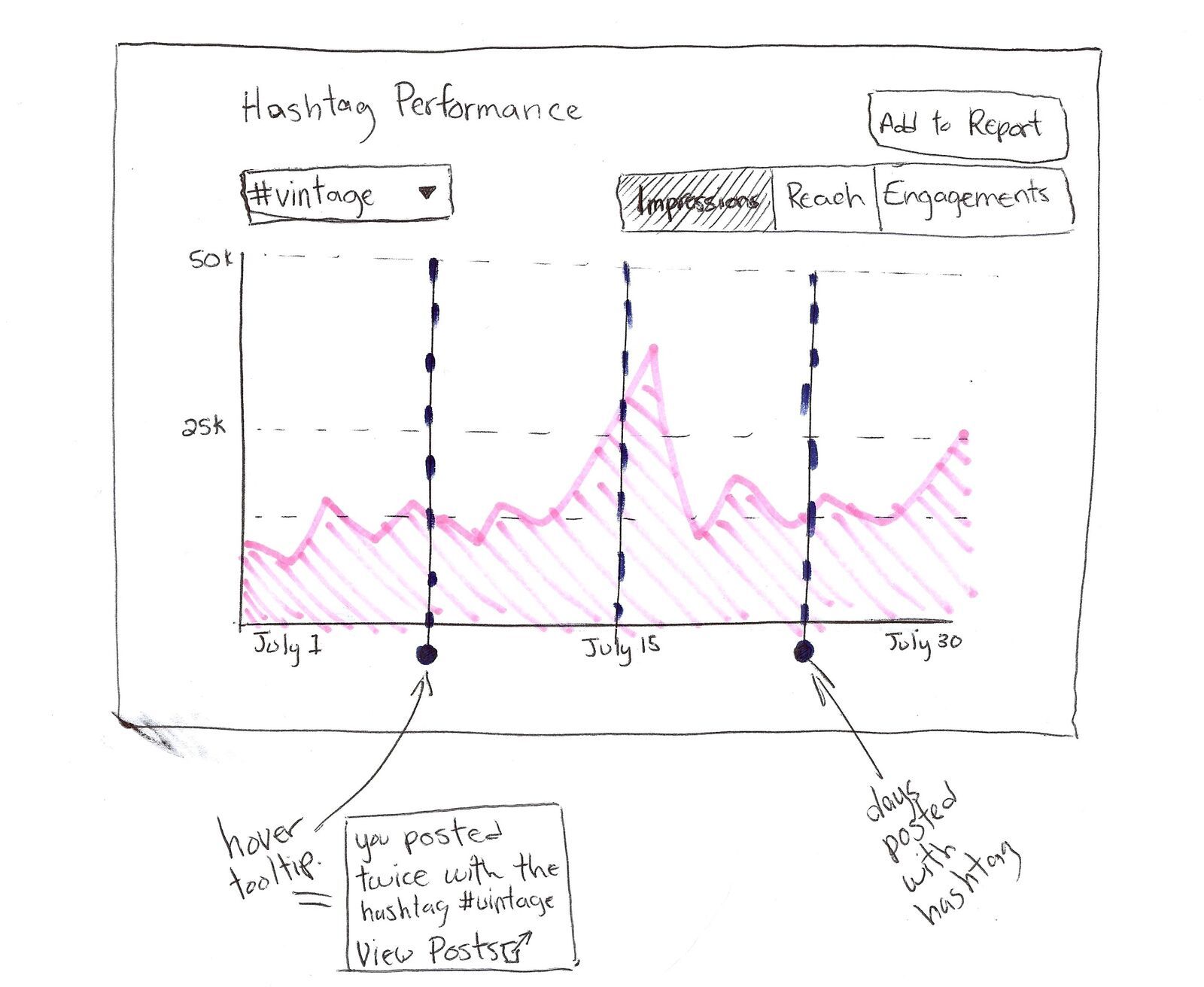

For example, this is one solution we explored for our new hashtag analysis tool. We ended up building something very different!

The result of this exploration is usually a clickable prototype, along with a detailed specification — we call them “specs” for short — of the choices we made along the way. (Our specs are non-traditional: instead of a long, highly-technical, structured document, we write ours in natural language with well-reasoned breakdowns of our proposed solution.)

The design and spec often represent the ideal, if-we-could-do-it-all version of the feature.

But, of course, we can’t do it all… nor should we!

In a small team with limited resources and high opportunity costs, it is in our users’ best interest for us to optimize for value instead of completeness.

Many times, feature completeness doesn’t directly serve the core job to be done but instead provides convenience. Convenience isn’t without value itself, but when a product is at a very early stage, finding product/market fit by delivering core value is paramount. Validating that you’re building the right thing takes precedence above all else.

So, with our fully-featured and convenience-laden prototype, it’s time to step back and take stock of the design.

Do we need to build all of this?

Almost all of the time the answer is no; we do not need all of this to deliver the core value.

Before we actually build a feature, we go through three stages of scoping:

- Product scoping

- Technical scoping

- Cycle scoping

Time to get our scope on!

Step One: Product scoping and user stories

During the product scoping phase, product managers and designers work together to design the feature or features we want to tackle in an upcoming development cycle. What we end up with is a specific, fully-featured, if-we-could-do-it-all version of the feature. This is almost always larger in scope than what we’ll initially build.

Once we’ve got our feature designed, we’ll explicitly take time to scope down and identify the minimum lovable version: the smallest version of the product that still delivers material value to the user.

Anything smaller than the minimum lovable version just doesn’t get the job done and therefore isn’t worth building.

Quick tip!

You can almost always ship a smaller version than what you first think! Push yourself to go small in the spirit of shipping quickly and learning sooner.

Case in point: When I was scoping the minimum version of Analyze, my research and intuition both told me we needed a simple comparison tool as a baseline, minimum feature. I was convinced nobody would pay for our product without it, but I was wrong. The comparison feature took a little longer to build than we planned for, and we decided to ship Analyze without it and see what happened. Lo and behold, we earned our first happy, paying customers even without the comparison tool!

This was an excellent opportunity to test my hypothesis that people needed the comparison tool to get enough value, and that hypothesis was happily invalidated. This experience instilled in me a desire to deeply question every “must-have” aspect of a new product or feature.

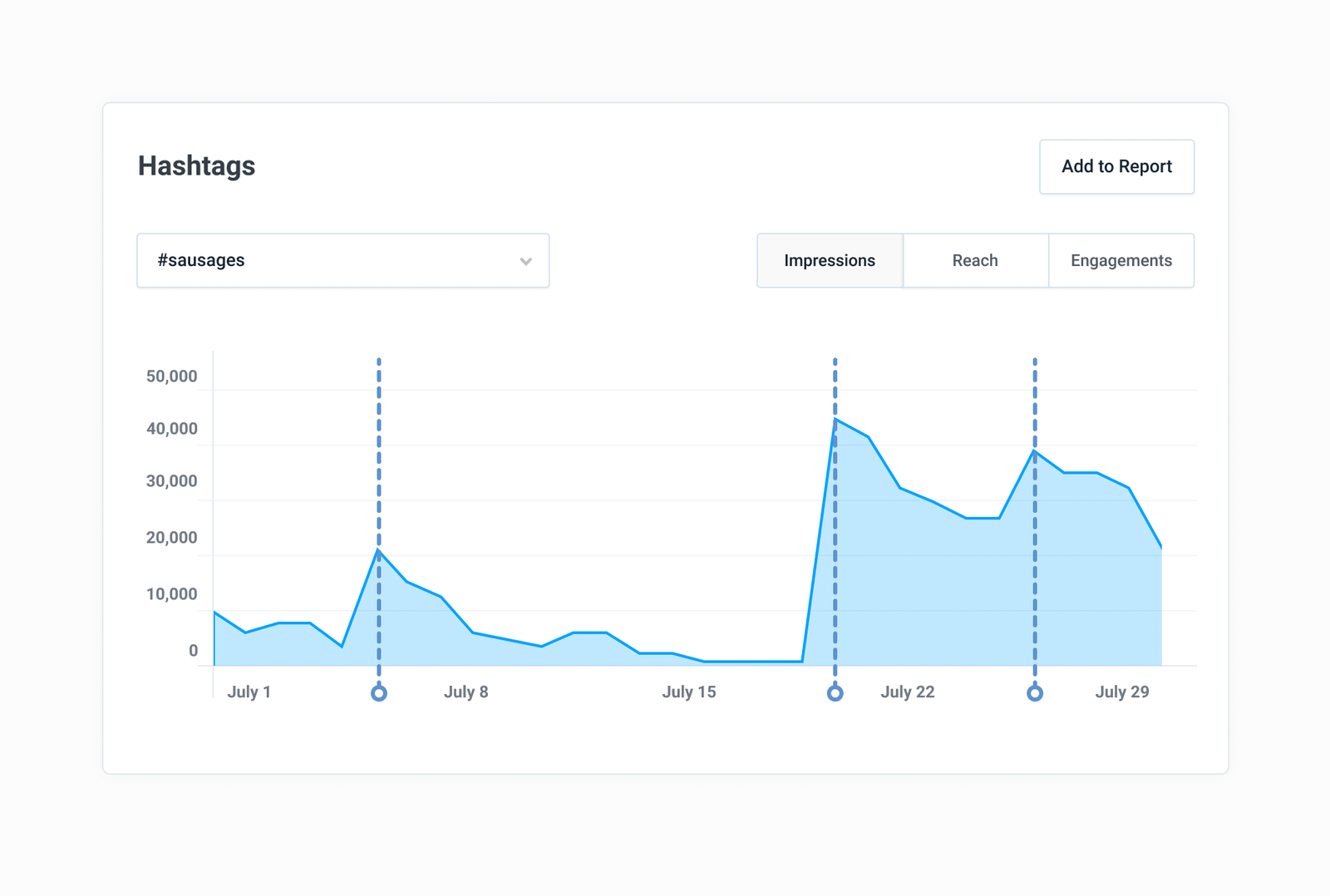

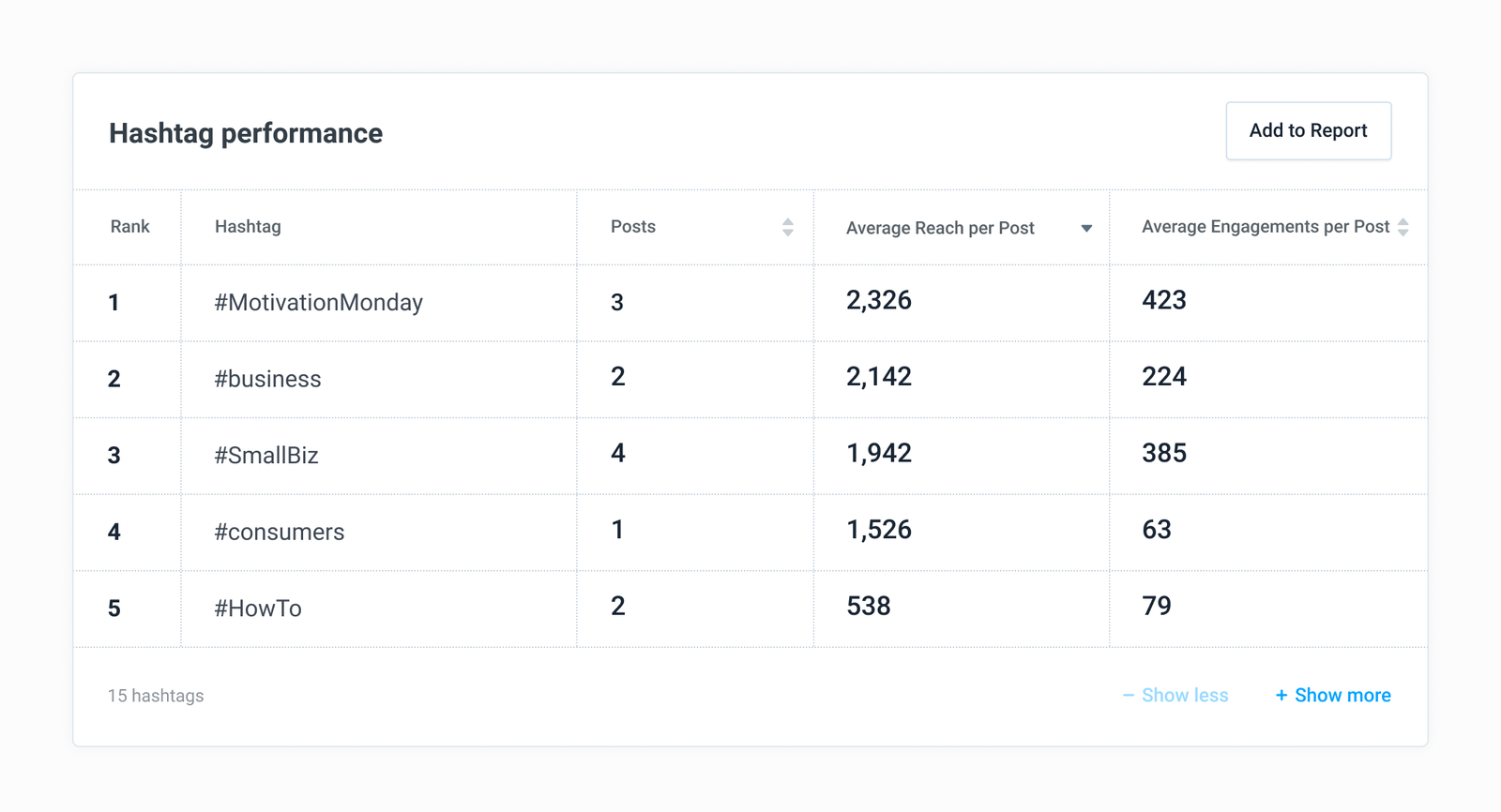

For example, we discovered that users could get a lot of value from better understanding how their hashtags affect reach and engagement. After several design explorations, we arrived at this full-featured, if-we-could-do-it-all version of our Hashtag Analysis module:

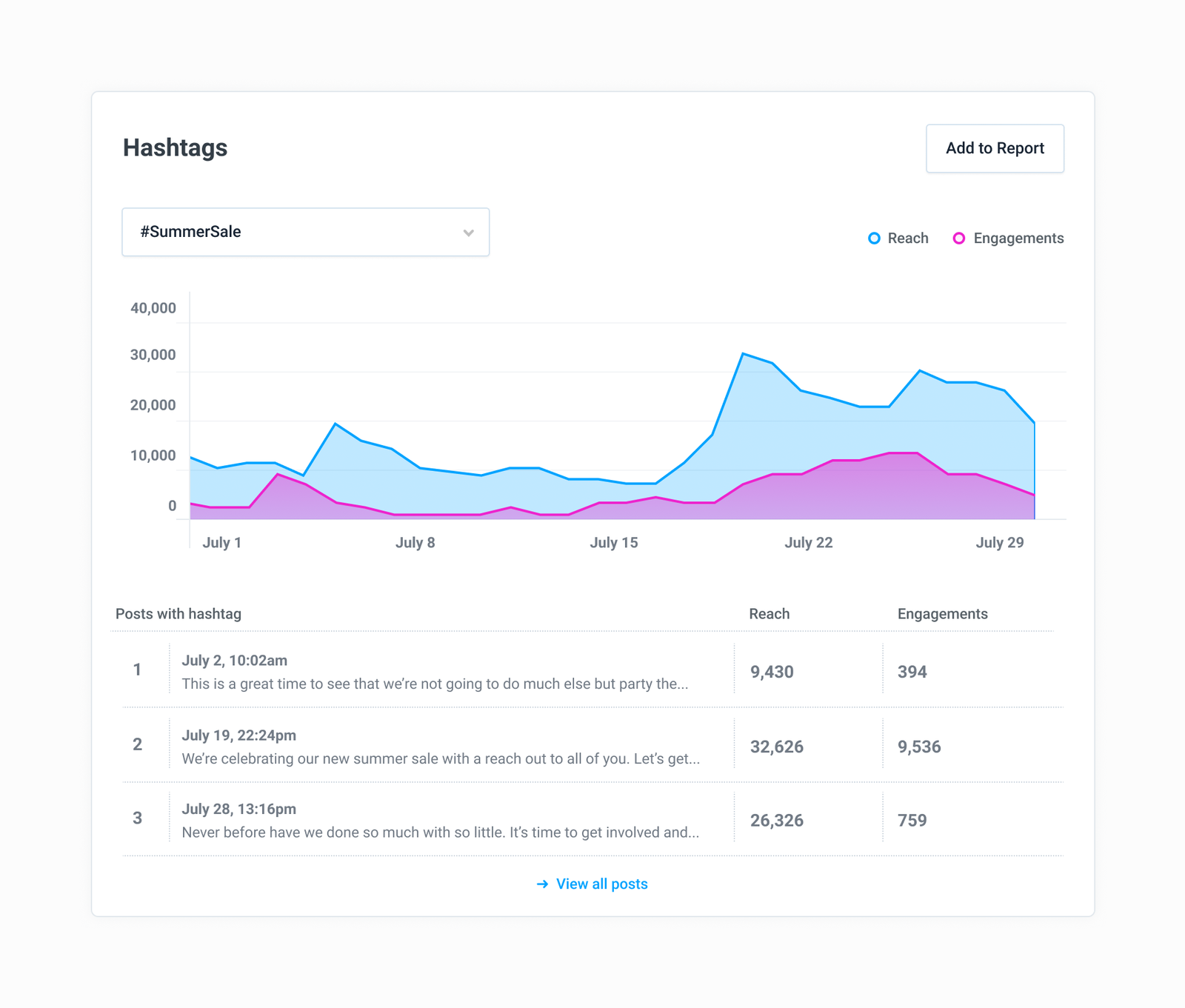

And then below is what we actually built.

Note how the scope is drastically smaller but the value it delivers is almost equal. I’m a huge believer in the 80/20 rule and it applies here: The minimal version of this feature is 20 percent of the size of the initial scope, yet it delivers 80 percent of the intended value. That is more than enough to solve our users’ challenge (understanding hashtag performance). This is a good tradeoff in the world of product development.

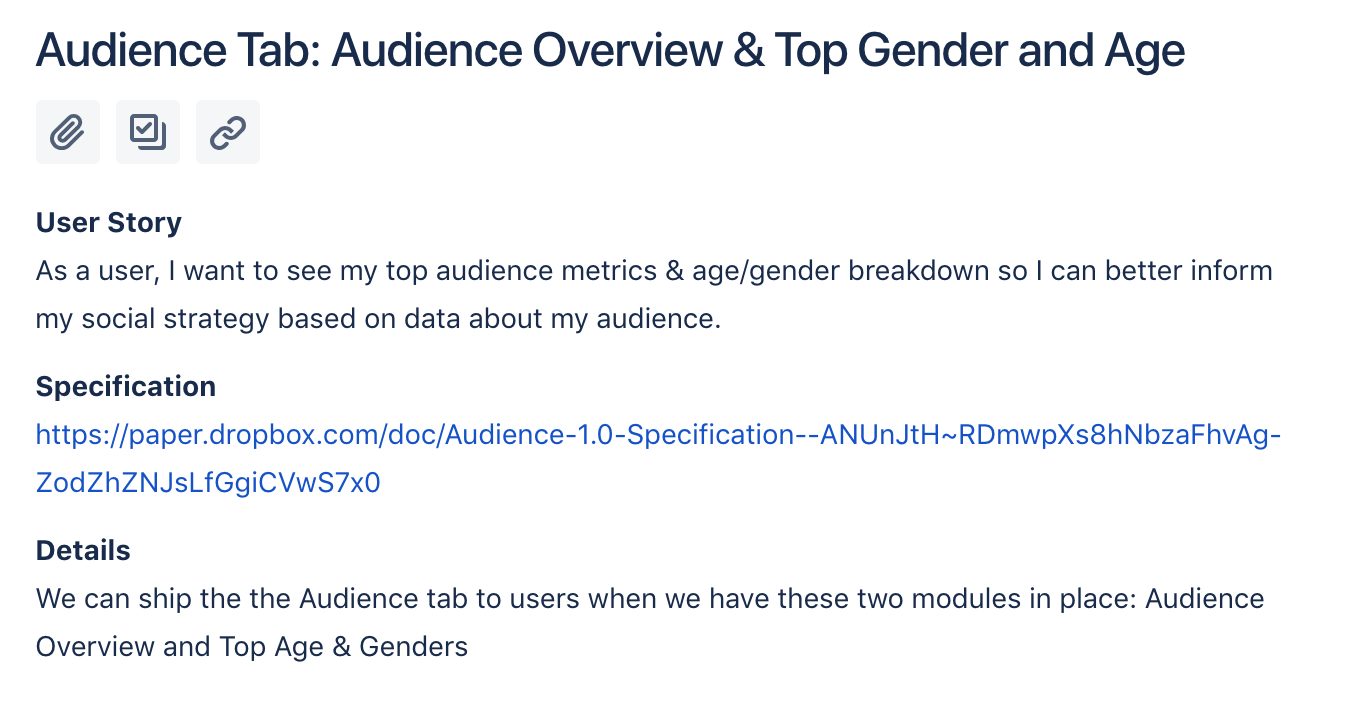

Once we’ve got our fully-featured solution specified, I’ll break it into user stories.

A user story is a discrete chunk of user-facing value. We use the following format:

“As a <type of user>, I want <some kind of goal>, so I can <some reason>”

and then attach designs, details, and acceptance criteria.

Sometimes one story is enough to represent a whole feature, but more often a feature will end up as several user stories: each a discrete, shippable chunk of value.

We then scale down our complete list of user stories to a smaller subset, which will represent our minimum lovable version. For example, a prototype might result in five user stories, but our minimum version will be only two of those five.
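As a rough illustration, the story format and the scale-down step could be modeled like this. It is only a sketch: the `UserStory` class, the `in_minimum_version` flag, and the five example stories are hypothetical and are not taken from the real Analyze backlog.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    """One discrete, shippable chunk of user-facing value."""
    user_type: str
    goal: str
    reason: str
    acceptance_criteria: list[str] = field(default_factory=list)
    in_minimum_version: bool = False  # hypothetical flag, set while scoping down

    def sentence(self) -> str:
        # Render the story in the "As a ..., I want ..., so I can ..." format.
        return f"As a {self.user_type}, I want {self.goal}, so I can {self.reason}"


def minimum_lovable(stories: list[UserStory]) -> list[UserStory]:
    """Keep only the stories flagged as part of the minimum lovable version."""
    return [s for s in stories if s.in_minimum_version]


# Illustrative stories only (assumed for this example).
stories = [
    UserStory("social media manager", "to see my top hashtags",
              "reuse the ones that work", in_minimum_version=True),
    UserStory("social media manager", "to see reach per hashtag",
              "judge how far each one travels", in_minimum_version=True),
    UserStory("social media manager", "to filter hashtags by date",
              "compare campaigns"),
    UserStory("social media manager", "to export hashtag data",
              "share it with my team"),
    UserStory("social media manager", "to get hashtag suggestions",
              "try new ones"),
]

for story in minimum_lovable(stories):
    print(story.sentence())
```

Here five stories come out of the prototype and only the two flagged ones make the minimum version; the other three stay in the backlog for a later cycle.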

Step Two: Technical scoping and estimates

With designs at the ready, product specification in hand, and our user stories pared down and ready to go, we meet as a whole team and walk through the user stories together. The engineers often ask clarifying questions, and we’ll tweak the details of our stories together as required.

Once the engineers are satisfied that they have a full understanding of the problem and our proposed solution, they’ll break the user stories into engineering tasks.

The engineering tasks are discrete tasks that are estimated with an intentionally coarse set of possible estimates: either one day, three days, or one week. Anything longer than one week of estimated work should get broken down further.

Estimates are derived cooperatively by the engineers and serve two primary purposes:

- Ensure all engineers are roughly on the same page in terms of effort required for each task

- Provide the product manager with a general gut check for cycle planning.

Estimates are not used as a measure of accountability for the engineers. We only want to know that we’re halfway decent at estimating engineering tasks (a notoriously difficult thing to do well!), and that we all agree on what the tasks entail.
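The coarse estimate buckets can be sketched in a few lines of code. This is a minimal sketch under stated assumptions: the names `Estimate`, `EngineeringTask`, and `to_estimate` are hypothetical, one week is assumed to mean five working days, and rounding a gut-feel number up to the nearest bucket is an illustrative choice rather than a description of how the team actually arrives at its estimates.

```python
from dataclasses import dataclass
from enum import Enum


class Estimate(Enum):
    """The three coarse buckets, expressed in working days."""
    ONE_DAY = 1
    THREE_DAYS = 3
    ONE_WEEK = 5  # assumption: a five-day working week


@dataclass
class EngineeringTask:
    title: str
    estimate: Estimate


def to_estimate(rough_days: float) -> Estimate:
    """Round a gut-feel number of days up to the nearest bucket.

    Raises ValueError when the task is bigger than one week, which is the
    signal to break it into smaller tasks instead of estimating it.
    """
    if rough_days <= 0:
        raise ValueError("an estimate must be positive")
    # Enum members iterate in definition order, smallest bucket first.
    for bucket in Estimate:
        if rough_days <= bucket.value:
            return bucket
    raise ValueError("longer than one week: break this task down further")


task = EngineeringTask("Example task", to_estimate(2))
print(task.estimate.name)
```

Keeping the set of allowed values this small means a task that feels like two days lands in the three-day bucket, and anything that feels longer than a week has to be split before it can be estimated at all.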

Step Three: Cycle scoping and planning

Now that we have a list of user stories and the engineering tasks that represent their reality, I use this information to plan our development cycle. We’re currently working in six-week development cycles, but I’ll only aim to plan the first four weeks at the outset. In week three of the cycle, I’ll take stock of where we are, re-prioritize things, and plan the second half of the cycle.

With the engineering tasks in place, I’m able to prioritize our user stories in the context of available time. We’ll often work on two or even three features at a time, and the estimates will help me decide which should come first.

For example, if we have three user stories, I might still opt to build the “least valuable” one first if it takes significantly less time than the others. This gets value to our users sooner.
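One way to make that tradeoff concrete is to rank stories by value delivered per day of work. The sketch below is an assumption made for illustration, not the rule actually used for Analyze: the numeric `value` scores, the `ScopedStory` class, and the three placeholder stories are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScopedStory:
    name: str
    value: int           # hypothetical relative value score, higher is better
    estimated_days: int  # sum of the story's engineering task estimates


def build_order(stories: list[ScopedStory]) -> list[ScopedStory]:
    """Order stories by value delivered per day of work, highest first."""
    return sorted(stories, key=lambda s: s.value / s.estimated_days, reverse=True)


# Placeholder stories with made-up numbers.
stories = [
    ScopedStory("story A", value=8, estimated_days=10),
    ScopedStory("story B", value=6, estimated_days=8),
    ScopedStory("story C", value=3, estimated_days=2),
]

order = [s.name for s in build_order(stories)]
print(order)
```

With these numbers, story C has the lowest value score but the best value per day, so it is built first and reaches users within days instead of weeks.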

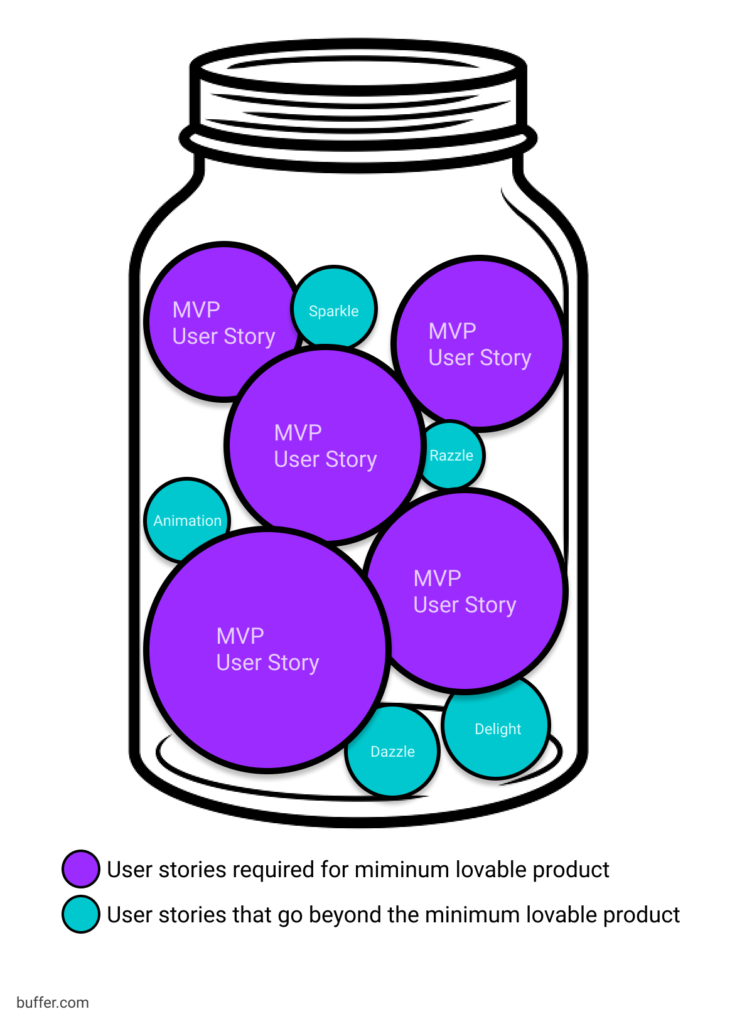

And—please bear with me—I will also “scope-up” some stories beyond our minimum lovable version.

I know, I know! I’ve been preaching the virtues of scoping down and then scoping down some more.

But imagine a jar that can fit five rocks. Even though you can’t fit a sixth rock, there’s still space between the five rocks, and maybe we can fit 10 pebbles in that space.

In this analogy, the jar is our six-week cycle, the rocks are the user stories required to fulfill our minimum lovable feature requirements, and the pebbles are smaller user stories or engineering tasks that go beyond our minimum requirements. It’s a great way to fit in a few “wow factor” aspects to a feature or product that aren’t strictly required to deliver value.
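The jar analogy maps onto a simple two-pass plan: place the rocks first, then fit pebbles into whatever time is left. This is a minimal sketch that assumes capacity is measured in working days and that smaller pebbles are tried first; `fill_cycle` and the example rocks and pebbles are hypothetical.

```python
def fill_cycle(
    capacity_days: int,
    rocks: list[tuple[str, int]],
    pebbles: list[tuple[str, int]],
) -> tuple[list[str], int]:
    """Plan a cycle: rocks (minimum lovable stories) first, pebbles after.

    rocks and pebbles are lists of (name, estimated_days) pairs.
    Returns the planned item names and the days left over.
    """
    planned = []
    remaining = capacity_days
    # Rocks are required, so every one must fit; fail loudly if not.
    for name, days in rocks:
        if days > remaining:
            raise ValueError(f"{name} does not fit: scope down or defer it")
        planned.append(name)
        remaining -= days
    # Pebbles are optional: try the smallest first and skip any that don't fit.
    for name, days in sorted(pebbles, key=lambda p: p[1]):
        if days <= remaining:
            planned.append(name)
            remaining -= days
    return planned, remaining


# Assumption: a six-week cycle is 30 working days for this example.
rocks = [("rock 1", 10), ("rock 2", 10), ("rock 3", 5)]
pebbles = [("pebble a", 3), ("pebble b", 1), ("pebble c", 3)]

planned, left_over = fill_cycle(30, rocks, pebbles)
print(planned, left_over)
```

In this example the three rocks use 25 of the 30 days, two of the three pebbles fit in the gap, and the third pebble waits for another cycle.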

At the end of it all, cycle scoping is largely a matter of balancing getting value to the user sooner against delivering the most value (sometimes you get lucky and these things are the same!). This balance informs both what we build and when we build it.

Let’s recap

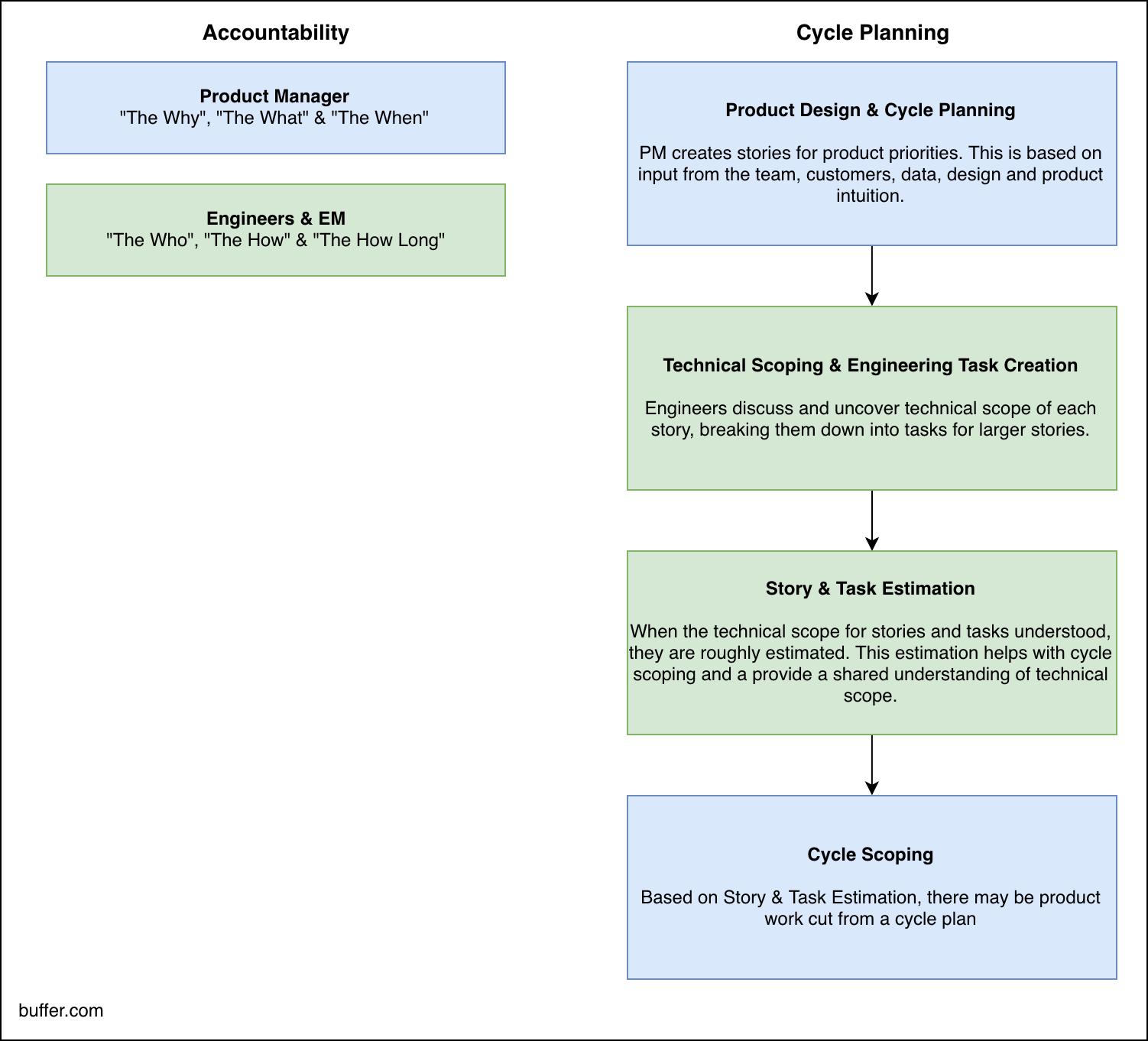

Product scoping (Product manager is accountable)

- Design your solution to the problem at hand

- Create a specification breakdown for the solution

- Turn this specification into user stories

- Go small: Define your minimum version of this feature that delivers value

Technical scoping (Engineering manager is accountable)

- Get full team alignment and understanding on the specification and user stories

- Break user stories into technical tasks

- Estimate technical tasks

Cycle scoping (Product manager is accountable)

- Based on estimates, select which stories and tasks will get completed in the current development cycle

- Leave out all the rest for another day

In case you’re curious about who’s accountable for what in this scoping process, each stage in the recap above names the accountable role in parentheses.

Over to you

This process is the result of many iterations and it continues to evolve over time. It’s by no means the best approach to product development, but we’ve found it effective in our specific product development cycle. That said, we’re always actively looking for ways to improve our process and make it more comfortable and efficient for the team.

If this triggers any questions or thoughts, please do not hesitate to leave a comment below or tweet at me. I’d be thrilled to hear from you!

I wrote a bit on how we scope and develop products at Buffer. Happy to answer any questions it brings up!

"Tight constraints force a creative, disciplined and critical approach to product design and development."

https://t.co/YKENXhxBYE

— Tom Redman (@redman) October 15, 2018
